Why an AI Ethics & Bias Guide Matters More Than Ever

Think about it: you apply for a loan and are denied, not because of your creditworthiness but because an algorithm incorrectly assumed you lived in an area with low credit ratings. Or consider a qualified job applicant whose resume never reaches a human recruiter because AI screening software filtered it out based on hidden bias.

Such situations are not imaginary. They are happening right now and are seriously affecting the lives of real people.

Understanding AI ethics and bias is no longer a luxury now that artificial intelligence is integrated into our lives, from the apps on our phones to our healthcare, employment, and criminal justice systems. This AI Ethics & Bias Guide will help you find your way through the complex world of responsible AI, whether you are a curious amateur or a professional interested in applying ethical AI practices in your organization.

The stakes are easy to understand: without active intervention on bias and ethics in AI systems, we risk automating and amplifying the very inequalities we have fought for generations to eliminate.

What Is AI Ethics?

AI ethics refers to the moral principles and norms that guide how artificial intelligence systems are developed, deployed, and used. In a nutshell, it asks: should we build this AI system just because we can? And if we do, how do we make sure it benefits society and keeps harm to a minimum?

AI ethics can be thought of as the guardrails that keep innovation on course. It deals with questions such as:

- What can we do to make AI systems fair to everyone?

- Who should bear responsibility when an AI makes a harmful decision?

- How transparent should AI decision-making processes be?

- What data is it ethical to collect and use to train AI?

Ethical AI is not simply about following rules; it is about developing technology that respects human dignity, promotes fairness, and is in line with our core values.

Understanding Algorithmic Bias: The Hidden Problem

Algorithmic bias is the tendency of AI systems to systematically produce unfair results that benefit or harm specific groups of people. The most important detail is this: AI systems learn from data, and if that data reflects past prejudices, incomplete information, or biased perceptions, the AI will learn and perpetuate those biases.

Let us break down the most important concepts:

Fairness in AI

Fairness means that AI systems do not discriminate against individuals or groups based on protected attributes such as race, gender, age, or socioeconomic status. The interesting part is that fairness has several different mathematical definitions, and in some cases they contradict one another. A system that is fair by one definition can be unfair by another.
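To make the conflict between fairness definitions concrete, here is a minimal sketch that compares two common ones on the same predictions: demographic parity (equal approval rates across groups) and equal opportunity (equal approval rates among qualified people). The data, group names, and function names are hypothetical and chosen only for illustration.

```python
from typing import List, Tuple

# Each record: (group, true_label, predicted_label); 1 = qualified / approved.
Record = Tuple[str, int, int]

def selection_rate(records: List[Record], group: str) -> float:
    """Share of people in `group` who received a positive prediction."""
    preds = [p for g, _, p in records if g == group]
    return sum(preds) / len(preds)

def true_positive_rate(records: List[Record], group: str) -> float:
    """Share of truly qualified people in `group` who were approved."""
    preds = [p for g, y, p in records if g == group and y == 1]
    return sum(preds) / len(preds)

# Hypothetical toy data: 6 of 10 people in group A are qualified,
# 2 of 10 in group B. The model approves exactly the qualified people.
records = (
    [("A", 1, 1)] * 6 + [("A", 0, 0)] * 4 +
    [("B", 1, 1)] * 2 + [("B", 0, 0)] * 8
)

parity_gap = abs(selection_rate(records, "A") - selection_rate(records, "B"))
opportunity_gap = abs(
    true_positive_rate(records, "A") - true_positive_rate(records, "B")
)

print(f"demographic parity gap: {parity_gap:.2f}")
print(f"equal opportunity gap:  {opportunity_gap:.2f}")
```

In this toy case the model is perfectly accurate and treats qualified people in both groups identically, so the equal opportunity gap is zero, yet the approval rates differ by 40 percentage points, so demographic parity is violated. Which definition should win depends on the application.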

Explainability and Transparency

AI transparency is the ability to understand how a system makes its decisions. When an AI denies you a loan or takes down your social media post, you are entitled to an explanation. Transparent AI lets humans question, review, and audit automated decisions. This is also referred to as explainable AI, or XAI.

Accountability

Accountability means that there is clear responsibility when AI systems cause harm. Who is to blame when a driverless car causes an accident? The manufacturer? The programmer? The person who trained the AI? Establishing responsibility ensures there are real consequences for deploying harmful or biased systems.

Common Types of AI Bias: Real-World Examples

AI bias is not a single issue but a set of different problems that can creep into systems at many points. Let us look at the most common types, with examples.

1. Historical Bias

This occurs when an AI learns from data that reflects past discrimination or inequality.

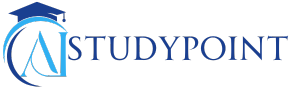

Example: Amazon once developed an AI resume-screening tool. The system taught itself to penalize resumes containing the word "women's" (as in "women's chess club") because it had been trained on ten years of historical hiring data dominated by male applicants. The AI effectively learned that being male was a qualification for the job. After discovering this bias, Amazon abandoned the project.

2. Representation Bias

This occurs when training data does not adequately cover all the groups the AI will encounter in the real world.

Example: Facial recognition systems have been reported to have much higher error rates for people with darker skin and for women than for light-skinned men. Research conducted at MIT found error rates of up to 34 percent for dark-skinned women, compared with under 1 percent for light-skinned men. Why? The training data consisted mostly of images of white men.

3. Measurement Bias

The data we use to train AI sometimes does not measure what we believe it measures.

Example: Using arrest records to forecast future criminal activity may seem logical, but an arrest is not the same as guilt, and policing itself has often been biased in whom it arrests. Over-policed communities will inevitably record more arrests, which leads AI systems to predict more crime there, resulting in more policing and still more arrests.
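The feedback loop in this example can be illustrated with a toy simulation. This is only a sketch under strong assumptions: two districts with the same true offence rate, and a hypothetical "hot spot" rule that sends 70 percent of patrols to whichever district has more recorded arrests. The function name, parameters, and numbers are all invented for illustration.

```python
def simulate_patrols(initial_arrests, rounds=5, patrols_per_round=100,
                     arrests_per_patrol=0.1):
    """Track recorded arrests in two districts with identical true offence rates.

    Assumption: every patrol yields the same expected number of arrests in
    either district, so any difference in the records comes from where
    patrols are sent, not from differences in behaviour.
    """
    arrests = list(initial_arrests)
    history = [tuple(arrests)]
    for _ in range(rounds):
        # Hypothetical allocation rule: the district with more recorded
        # arrests is treated as the "hot spot" and gets 70% of patrols.
        hot = 0 if arrests[0] >= arrests[1] else 1
        shares = [0.3, 0.3]
        shares[hot] = 0.7
        for district in range(2):
            arrests[district] += (
                patrols_per_round * shares[district] * arrests_per_patrol
            )
        history.append(tuple(arrests))
    return history

# District 0 starts with slightly more recorded arrests than district 1.
history = simulate_patrols(initial_arrests=(12, 10))
gaps = [a - b for a, b in history]

for step, (a, b) in enumerate(history):
    print(f"round {step}: district 0 = {a:.0f}, district 1 = {b:.0f}")
```

Although both districts behave identically, the small initial difference in the records grows every round, because the records decide where patrols go and the patrols generate the records.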

4. Aggregation Bias

This happens when a one-size-fits-all model fails to account for significant differences between groups.

Example: A diabetes prediction model may be trained on data from a diverse population, but diabetes does not present the same way across ethnic groups. A generalized model may work well for most of the population yet fail to detect the condition in minority groups with different risk factors or symptoms.

5. Evaluation Bias

If the benchmark data used to test an AI system does not reflect the diversity of the real world, you will miss problems.

Example: A voice recognition system that has been tested extensively with native English speakers may appear to work well until it is deployed worldwide and turns out not to recognize non-native accents, effectively locking out millions of potential users.

The Real-World Impact of Biased AI

The effects of AI bias go far beyond the theoretical. They have a tangible impact on people's lives, livelihoods, and basic rights.

Impact on Individuals

Healthcare: Biased medical AI may lead to misdiagnosis or poor treatment recommendations for underrepresented populations. A 2019 study found that an algorithm applied to more than 200 million patients in U.S. hospitals was much less likely to refer Black patients for additional care, even when they were sicker than white patients.

Employment: Automated hiring tools can filter out competent candidates based on factors unrelated to job performance, depriving people of opportunities because of hidden biases.

Criminal Justice: Risk assessment tools used in courts to estimate recidivism have been shown to wrongly label Black defendants as high-risk about twice as often as white defendants, which can influence sentencing and parole decisions.

Impact on Society

Biased AI systems can reinforce and amplify existing social disparities. When such systems make thousands or millions of decisions every day, even minor biases are multiplied at that scale, and society bears the cost. This can:

- Perpetuate discrimination in housing, lending, and insurance.

- Restrict access to education and economic opportunities for disadvantaged groups.

- Erode trust in institutions that rely on flawed AI.

- Create digital divides in which technology favors one group over another.

Impact on Businesses

Companies that deploy biased AI face serious risks:

- Legal liability: Biased AI may violate civil rights laws and regulations.

- Reputational damage: Publicity about a biased system can ruin a brand.

- Lost revenue: Systems that fail for entire demographic groups leave money on the table.

- Regulatory scrutiny: Governments around the world are enacting tougher AI laws.

AI Ethics & Bias Guide: Best Practices for Responsible AI

Building ethical AI is not merely about avoiding bias; it means actively creating systems that foster fairness, transparency, and accountability. Here is how organizations and individuals can build responsible AI:

1. Diverse and Representative Data

What to do: Make sure that training data adequately represents all the populations the AI will serve. What matters is not just the amount of data collected but its quality and how well it represents the real world.

Practical advice: Conduct data audits before training AI models. Ask: Who is represented in this data? Who is missing? Does this data carry historical biases?
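A first-pass data audit can be as simple as comparing each group's share of the training data with its share of the population the system will serve. The sketch below assumes you already have a group label per record and a set of reference shares; the function name, the 5-point tolerance, and the numbers are hypothetical.

```python
from collections import Counter

def representation_report(groups, reference_shares, tolerance=0.05):
    """Compare each group's share of the dataset with a reference population.

    `groups` holds one group label per training record.
    `reference_shares` maps group label -> expected share (0..1).
    A group is flagged when its observed share falls more than
    `tolerance` below the expected share.
    """
    counts = Counter(groups)
    total = len(groups)
    report = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        report[group] = {
            "observed": observed,
            "expected": expected,
            "under_represented": observed < expected - tolerance,
        }
    return report

# Hypothetical dataset of 100 records and hypothetical population shares.
training_groups = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
reference = {"A": 0.60, "B": 0.25, "C": 0.15}

report = representation_report(training_groups, reference)
for group, row in report.items():
    flag = "UNDER-REPRESENTED" if row["under_represented"] else "ok"
    print(f"{group}: observed {row['observed']:.2f}, "
          f"expected {row['expected']:.2f} -> {flag}")
```

A check like this answers only the "who is missing?" question. Whether the labels themselves carry historical bias needs separate review.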

2. Multidisciplinary Development Teams

What to do: Include people with varied backgrounds, perspectives, and skill sets in AI development: not only engineers and data scientists but also ethicists, social scientists, and members of the affected groups.

Why it matters: Homogeneous teams are prone to blind spots. Diverse perspectives help uncover potential biases and harms that could otherwise go unseen.

3. Regular Bias Testing and Auditing

What to do: Test AI systems for bias across different groups of people before deployment, and monitor them continuously afterward. Choose fairness metrics suited to your application.

Practical tip: Build test cases for edge cases and underserved groups. Do not rely on overall accuracy alone.
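The reason overall accuracy is not enough is that a strong average can hide a weak subgroup. A minimal sketch of disaggregated evaluation, using hypothetical group names and toy results:

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Return accuracy per group.

    `records` is an iterable of (group, true_label, predicted_label).
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {group: correct[group] / total[group] for group in total}

# Hypothetical test set: 90 majority-group cases (88 correct) and
# 10 minority-group cases (5 correct).
test_records = (
    [("majority", 1, 1)] * 88 + [("majority", 1, 0)] * 2 +
    [("minority", 1, 1)] * 5 + [("minority", 1, 0)] * 5
)

overall = sum(y == p for _, y, p in test_records) / len(test_records)
per_group = accuracy_by_group(test_records)

print(f"overall accuracy: {overall:.2f}")
for group, acc in per_group.items():
    print(f"{group}: {acc:.2f}")
```

Here the overall accuracy is 93 percent, while the model is right only half the time for the minority group. Reporting metrics per group makes that visible before deployment.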

4. Transparency and Explainability

What to do: Design systems that can give human-understandable explanations of their decisions. Document how the algorithm works, what data it was trained on, and its limitations.

Why it is important: Trust is built on openness, which also enables effective checks and balances. When people know the reasoning behind an AI decision, they can contest unreasonable results.
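For simple models, an explanation can come straight from the model itself. The sketch below uses a hypothetical linear scoring model for loan decisions and reports how much each input pushed the score up or down. The feature names, weights, and threshold are invented for illustration; complex models usually need dedicated explanation techniques.

```python
# Hypothetical linear scoring model: score = BIAS + sum(weight * value).
WEIGHTS = {"income_k": 0.04, "years_employed": 0.3, "missed_payments": -1.2}
BIAS = -3.0
THRESHOLD = 0.0  # approve when the score is at or above this value

def explain_decision(applicant):
    """Return (decision, score, factors), with factors sorted so that the
    inputs that hurt the applicant most come first."""
    contributions = {
        name: WEIGHTS[name] * applicant[name] for name in WEIGHTS
    }
    score = BIAS + sum(contributions.values())
    decision = "approve" if score >= THRESHOLD else "deny"
    ranked = sorted(contributions.items(), key=lambda item: item[1])
    return decision, score, ranked

applicant = {"income_k": 50, "years_employed": 2, "missed_payments": 2}
decision, score, ranked = explain_decision(applicant)

print(f"decision: {decision} (score {score:.1f})")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.1f}")
```

For this applicant the output shows a denial, with missed payments as the factor that lowered the score most. That gives the person something specific to contest or correct.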

5. Human Oversight and Checks and Balances

What to do: Keep humans in the loop for high-stakes decisions. Make sure that people affected by AI decisions have meaningful ways to appeal and seek recourse.

Example: When an AI denies someone a loan, that person should be able to request a human review and receive an explanation of the factors behind the decision.
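One way to put human oversight into practice is a routing rule that decides which automated decisions a person must look at. The sketch below assumes a hypothetical policy: every denial, and every decision the model is not confident about, goes to a human reviewer. The class, field names, and 0.9 cutoff are illustrative only.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    applicant_id: str
    approved: bool
    confidence: float  # model's confidence in its own decision, 0..1

def needs_human_review(decision: Decision, min_confidence: float = 0.9) -> bool:
    """Hypothetical policy: review all denials and all low-confidence calls."""
    return (not decision.approved) or decision.confidence < min_confidence

decisions = [
    Decision("a-1", approved=True, confidence=0.97),
    Decision("a-2", approved=True, confidence=0.62),
    Decision("a-3", approved=False, confidence=0.99),
]

review_queue = [d.applicant_id for d in decisions if needs_human_review(d)]
print(review_queue)
```

With these inputs, the second and third applications are queued for review: one because the model was unsure, the other because it was a denial.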

6. Organizational Accountability Structures

What to do: Assign clear ownership of AI systems across their entire lifecycle, from design and deployment through monitoring. Set up governance structures that make someone answerable for ethical outcomes.

Practical tip: Appoint an AI ethics officer or committee to review high-risk AI applications before deployment.

7. Ethical Impact Assessments

What to do: Carry out thorough assessments of the potential harms and benefits of AI systems, particularly for vulnerable groups, before deploying them.

Think of it this way: it is like an environmental impact statement, but for social and ethical effects.

Challenges in Implementing Ethical AI

Despite good intentions, there are considerable challenges to developing ethical AI:

Technical Complexity

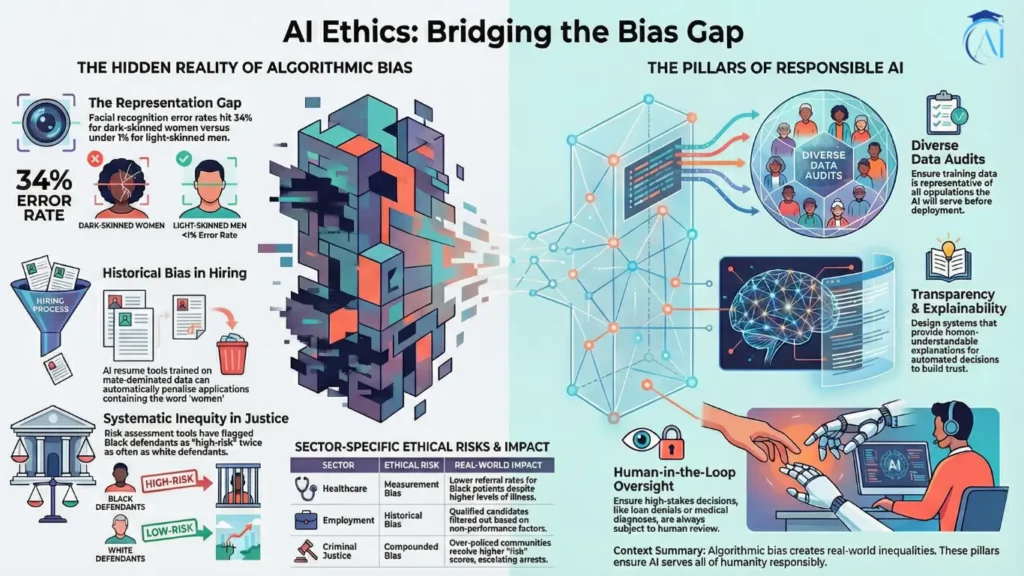

Bias is hard to measure and hard to mitigate. There is no fairness button to push. Fairness metrics may conflict, and improving fairness for one population may reduce it for another. The specialized expertise that teams need is in short supply.

Trade-offs Between Accuracy and Fairness

In some cases, making an AI system fairer comes at the cost of reduced accuracy. Organizations have to decide how to make these trade-offs, weighing them not only technically but also ethically.
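The trade-off can be seen in a small worked example. The sketch below scores a toy dataset under two threshold settings and reports accuracy alongside the gap in approval rates between groups. The scores, labels, and thresholds are hypothetical, and group-specific thresholds are only one of several possible interventions.

```python
def evaluate(data, thresholds):
    """Return (accuracy, approval_rate_gap).

    `data` maps group -> list of (score, true_label).
    `thresholds` maps group -> minimum score needed for approval.
    """
    correct = 0
    total = 0
    rates = {}
    for group, rows in data.items():
        preds = [int(score >= thresholds[group]) for score, _ in rows]
        correct += sum(int(p == y) for p, (_, y) in zip(preds, rows))
        total += len(rows)
        rates[group] = sum(preds) / len(preds)
    gap = max(rates.values()) - min(rates.values())
    return correct / total, gap

# Hypothetical model scores and true outcomes for two groups.
data = {
    "A": [(0.9, 1), (0.8, 1), (0.7, 1), (0.4, 0)],
    "B": [(0.6, 1), (0.45, 0), (0.3, 0), (0.2, 0)],
}

same_threshold = evaluate(data, {"A": 0.5, "B": 0.5})
adjusted_threshold = evaluate(data, {"A": 0.5, "B": 0.25})

print(f"same threshold:     accuracy {same_threshold[0]:.2f}, "
      f"gap {same_threshold[1]:.2f}")
print(f"adjusted threshold: accuracy {adjusted_threshold[0]:.2f}, "
      f"gap {adjusted_threshold[1]:.2f}")
```

With one shared threshold the toy model is fully accurate but approves group A three times as often as group B. Lowering the threshold for group B closes the approval gap and drops accuracy to 75 percent. Neither setting is automatically right; the choice is an ethical decision as much as a technical one.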

Lack of Standardization

There is no consensus on what counts as fair AI or how to measure it. Different industries, regions, and applications may call for different solutions, which makes one-size-fits-all approaches hard to develop.

Business Pressures

Companies are under mounting pressure to deploy AI quickly to stay competitive. Comprehensive ethical reviews and bias testing consume both time and resources, which creates tension between speed to market and responsible development.

Data Limitations

Organizations often lack access to high-quality, diverse training data even when they want to build fair AI. Historical data may reflect past discrimination, and collecting better data raises privacy concerns.

Evolving Regulations

The AI regulatory environment continues to change. Companies must navigate a maze of new laws and regulations that differ by jurisdiction, which makes compliance difficult.

Future Trends in AI Ethics and Bias

The discipline of AI ethics is quickly developing. Here’s what’s on the horizon:

Greater Regulation: Governments across the globe are enacting AI-focused laws. One example is the EU's AI Act, which classifies AI systems by level of risk and imposes stringent requirements on high-risk applications. More countries are likely to follow.

AI Auditing Services: Companies are beginning to offer algorithm audits for bias and compliance, much as auditors review financial systems. This is likely to become the norm for high-stakes AI applications.

Fairness-Aware Machine Learning: Researchers are developing new methods for building fairness constraints into AI systems, so that ethical AI becomes the default rather than a feature that has to be requested.

Increasing Transparency Requirements: There will be more demands on AI systems to justify their decisions, particularly in highly regulated domains such as finance, health, and criminal justice.

Participatory AI Design: A growing number of organizations are involving affected communities in AI design, so that systems reflect the values and needs of the people they affect.

AI Ethics Education: Universities and corporations are investing heavily in AI ethics education, recognizing that future developers need not only technical competence but also a solid ethical grounding.
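To give a flavor of the fairness-aware machine learning trend mentioned above, here is a minimal sketch of one well-known pre-processing idea, often called reweighing: each training example gets a weight so that, after weighting, group membership and outcome label look statistically independent. The data and function names are hypothetical, and this is an illustration of the idea rather than a production method.

```python
from collections import Counter

def reweighing_weights(samples):
    """Return a weight for each (group, label) pair.

    weight = P(group) * P(label) / P(group, label)
    Pairs that are rarer than independence would predict get weights above 1.
    """
    n = len(samples)
    group_counts = Counter(group for group, _ in samples)
    label_counts = Counter(label for _, label in samples)
    pair_counts = Counter(samples)
    return {
        (group, label): (group_counts[group] * label_counts[label])
        / (n * pair_counts[(group, label)])
        for (group, label) in pair_counts
    }

def weighted_positive_rate(samples, weights, group):
    """Share of positive labels in `group` after applying the weights."""
    positive = sum(weights[(g, y)] for g, y in samples if g == group and y == 1)
    everything = sum(weights[(g, y)] for g, y in samples if g == group)
    return positive / everything

# Hypothetical history: group A was approved 6 times out of 10, group B 2 of 10.
samples = [("A", 1)] * 6 + [("A", 0)] * 4 + [("B", 1)] * 2 + [("B", 0)] * 8
weights = reweighing_weights(samples)

for pair in sorted(weights):
    print(pair, round(weights[pair], 2))
print(round(weighted_positive_rate(samples, weights, "A"), 2),
      round(weighted_positive_rate(samples, weights, "B"), 2))
```

After weighting, both groups have the same positive rate in the training data, so a model trained with these sample weights no longer sees group membership as a predictor of the outcome in the raw counts.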

Conclusion: Building a Better AI Future Together

This AI Ethics & Bias Guide has taken you through the fundamentals of responsible AI, from understanding what algorithmic bias looks like to implementing practical solutions. The key message? Ethical AI is not a luxury or an afterthought; it is necessary to make technology useful to all.

The choices we make today about ethics and fairness will shape the society we live in for generations to come, as AI becomes more powerful and more widespread. Whether you are building AI systems, deploying them in your organization, or simply using AI-powered products, you have a role to play in demanding and upholding ethical AI practices.

Keep in mind the following takeaways:

- Bias in AI results from biased data, flawed design, and incomplete perspectives; it is not inevitable.

- Ethical AI requires transparency, accountability, diverse teams, and continuous monitoring.

- Building fair AI systems is both a technical challenge and a moral necessity.

- Everyone has a stake in ensuring that AI works in the best interest of humanity.

The future of AI does not have to mirror the biases of the past. Through awareness, dedication, and sound practices, we can develop artificial intelligence that is truly intelligent: not only technically advanced but also wise enough to treat every human being justly and with dignity.

AI Ethics & Bias Guide – FAQ

The AI Ethics & Bias Guide is a comprehensive resource on ethical principles and bias problems in artificial intelligence. It explains how AI systems can be made fair and transparent and how algorithmic bias can be detected and reduced.

The AI Ethics & Bias Guide is important because AI is now everywhere: in health care, employment, lending, and everyday decisions. With this guide, you will understand how biased AI can affect you and what ethical AI practices look like. Whether you are a beginner or a professional, this AI Ethics & Bias Guide is valuable.

AI bias occurs when machine learning systems make unfair decisions that disadvantage certain groups. According to the AI Ethics & Bias Guide, this bias can stem from training data, algorithm design, or evaluation. Examples include facial recognition that works poorly for particular skin tones and hiring software that filters out qualified candidates.

In this AI Ethics & Bias Guide, you will find these topics:

- The fundamental definitions of AI ethics.

- The various kinds of algorithmic bias.

- Real-world examples of AI bias.

- Strategies for implementing ethical AI.

- The principles of fairness and transparency.

- Best practices for responsible AI.

- Future trends in AI ethics.

The AI Ethics & Bias Guide explains that AI fairness means equitable treatment for every demographic group. However, there are many mathematical definitions of fairness, and they can be inconsistent with one another, so fairness metrics should be chosen to fit the context.

The AI Ethics & Bias Guide explains that accountability means having clear lines of responsibility when AI causes harm. Responsibility should be clearly divided among organizations, developers, and data providers. Current laws increasingly hold companies accountable for the decisions their AI systems make.

According to the AI Ethics & Bias Guide, representation bias occurs when training data does not adequately represent all groups. Example: Facial recognition systems trained mostly on images of white men can have error rates of up to 34 percent for dark-skinned women.
